Saturday 2026-03-07

06:00 AM

Anthropic’s Statement To The ‘Department Of War’ Reads Like A Hostage Note Written In Business Casual [Techdirt]

We’ve been covering the ongoing saga of the Trump administration’s attempt to destroy Anthropic for the sin of having modest ethical guidelines around its AI technology.

The short version: Anthropic said it didn’t want its AI making autonomous kill decisions without human oversight. Defense Secretary Pete Hegseth responded by declaring the company a supply chain risk—a designation designed for foreign adversaries, not San Francisco companies with ethics policies—and ordering every federal agency to purge Anthropic’s technology. Now Anthropic is back at the negotiating table with the same people who just tried to kill it.

On Thursday, Anthropic CEO Dario Amodei published a new statement about “where things stand” with the Defense Department. And it is… something. It reads like what happens when a serious person at a serious company has to write a serious document in an environment that has gone completely insane—and the result is a press release that, under any previous administration, would have been recognized as deeply alarming corporate groveling, but which now just kind of… slides into the news cycle as another Thursday.

The statement is titled “Where things stand with the Department of War.” Not the Department of Defense. The Department of War. Yes, Trump and Hegseth have spent hundreds of millions of dollars renaming the Defense Department, but it’s not up to them. It’s up to Congress. According to the law, it’s still the Department of Defense, and anyone using the name Department of War is clearly sucking up to the administration. It’s all theater.

Amodei uses the fictitious name throughout his statement. Every single reference. “Department of War.” This is a company that six days ago was being praised for standing on principle, and its CEO can’t even bring himself to use the department’s legal name because the administration insists upon everyone using the cosplay version. Before you even get to the substance, the document has already bent the knee. He’s negotiating with people who branded him a national security threat, and he opens by adopting their preferred terminology like a hostage reading a prepared script.

From there, the statement proceeds through a series of passages that are individually rational and collectively dystopian. Take this section:

I would like to reiterate that we had been having productive conversations with the Department of War over the last several days, both about ways we could serve the Department that adhere to our two narrow exceptions, and ways for us to ensure a smooth transition if that is not possible. As we wrote on Thursday, we are very proud of the work we have done together with the Department, supporting frontline warfighters with applications such as intelligence analysis, modeling and simulation, operational planning, cyber operations, and more.

“We are very proud of the work we have done together with the Department”—the department that is currently trying to destroy the company over a contractual dispute. The department whose secretary called Anthropic’s stance “a master class in arrogance and betrayal” and “a cowardly act of corporate virtue-signaling that places Silicon Valley ideology above American lives.” The department that declared Anthropic a supply chain risk to national security—again, a designation designed for hostile foreign infiltration of military systems, not for a San Francisco company that said “maybe a human should be in the loop before the robot decides to kill someone.”

And here’s Dario, proudly listing all the ways Anthropic has served these same people. “Supporting frontline warfighters.” This is the language of a Pentagon press release. Six days. It took six days to go from “we have principles about autonomous weapons” to “we are very proud of supporting frontline warfighters with cyber operations.”

This may be a rational decision from a company trying to stave off a ridiculous fight, but the real story is that they feel the need to act this way.

Then there’s the apology. Earlier this week, an internal Amodei memo leaked in which he described OpenAI’s rushed Pentagon deal as “safety theater” and “straight up lies,” and noted that the key difference between the two companies’ positions was that OpenAI “cared about placating employees” while Anthropic “actually cared about preventing abuses.” It was blunt. It was competitive. It also appeared to be accurate—OpenAI subsequently rewrote its contract to address many of the concerns Amodei identified.

But accuracy is apparently a liability now:

I also want to apologize directly for a post internal to the company that was leaked to the press yesterday. Anthropic did not leak this post nor direct anyone else to do so—it is not in our interest to escalate this situation. That particular post was written within a few hours of the President’s Truth Social post announcing Anthropic would be removed from all federal systems, the Secretary of War’s X post announcing the supply chain risk designation, and the announcement of a deal between the Pentagon and OpenAI, which even OpenAI later characterized as confusing. It was a difficult day for the company, and I apologize for the tone of the post. It does not reflect my careful or considered views. It was also written six days ago, and is an out-of-date assessment of the current situation.

He is apologizing for the tone of an accurate description of events because the accurate description made the people trying to destroy his company unhappy. He notes it was “a difficult day for the company”—the day the President of the United States directed every federal agency to cease using your technology and the Defense Secretary branded you a threat to national security. Yeah, I’d call that a difficult day. And on that difficult day, Amodei accurately described what was happening, and now he has to say sorry for it because the accurate description “does not reflect my careful or considered views.”

Translation: the careful and considered view is that you don’t say true things out loud when the administration is watching and deeply focused on punishing you.

And then we arrive at the closing:

Our most important priority right now is making sure that our warfighters and national security experts are not deprived of important tools in the middle of major combat operations. Anthropic will provide our models to the Department of War and national security community, at nominal cost and with continuing support from our engineers, for as long as is necessary to make that transition, and for as long as we are permitted to do so.

Anthropic is offering to provide its AI models to the military at nominal cost—essentially a discount—while simultaneously preparing to challenge the supply chain risk designation in court. The company is saying: “We believe your action against us is illegal, we will fight it in court, and also here’s our technology at a steep discount, please don’t hurt us anymore.”

And the framing: “Our most important priority right now is making sure that our warfighters… are not deprived of important tools in the middle of major combat operations.” This is Anthropic fully adopting Hegseth’s rhetoric—the exact framing that was used to justify the attack on them in the first place. Hegseth’s entire argument was that Anthropic’s ethical guidelines were depriving “warfighters” of critical tools. And now Anthropic is echoing that language as though it were their own concern all along. The “warfighters” language is especially rich given that this administration keeps tap dancing around the question of whether we’re actually “at war” with Iran—apparently we have warfighters who aren’t fighting a war.

The statement closes with what might be the single most remarkable sentence:

Anthropic has much more in common with the Department of War than we have differences. We both are committed to advancing US national security and defending the American people, and agree on the urgency of applying AI across the government. All our future decisions will flow from that shared premise.

Remember, this company was founded by people who left OpenAI specifically because they thought AI safety was being treated as an afterthought. Their entire brand, their entire reason for existing, was the proposition that there are some things AI should not be used for without significant guardrails. “Anthropic has much more in common with the Department of War than we have differences” is the kind of sentence you write when survival has replaced principle as the operating framework.

Every individual decision in this statement is probably the rational play. Using the administration’s preferred name costs nothing. Apologizing for the memo reduces friction. “Warfighter” language signals alignment. These are survival tactics, and they’re being deployed by someone who appears to have no good options.

That’s the actual horror. This is what the “good” decisions look like in an authoritarian world.

Under any previous administration—Democrat or Republican—a company telling the Defense Department “we’d prefer our AI not make autonomous kill decisions without human oversight” would have been a mostly unremarkable negotiating position. It might have been a deal breaker for that particular contract. The two sides might have parted ways. What would not have happened is the Secretary of Defense going on social media to accuse the company of “betrayal” and “duplicity,” the President directing all federal agencies to stop using the company’s products, and the company’s CEO subsequently having to write a public groveling statement apologizing for having accurately described the situation while pledging free labor to the government that attacked him.

And every AI company watching this—every tech company of any kind—is absorbing the lesson. Tell the administration “no” on even the most modest ethical point, and this is what follows: a week of chaos, a supply chain risk designation, your CEO apologizing for telling the truth, and a press release pledging your technology to the military at cost while you simultaneously sue to stay alive.

As I wrote last year, authoritarian systems are fundamentally incompatible with innovation. They produce exactly this kind of environment—one where the rational move for a company is to grovel in public while fighting in court, to adopt the language of the people attacking you, and to apologize for having been right. The AI bros who supported Trump because Biden’s AI plan involved some annoying paperwork should take a long look at this statement and ask themselves whether this is the “pro-innovation” environment they were promised.

Because right now, the most “pro-innovation” thing happening in American AI is a hostage note written in business casual—and everyone pretending it’s just a press release.

Kanji of the Day: 刀 [Kanji of the Day]

刀

✍2

小2

sword, saber, knife

トウ

かたな そり

太刀 (たち) — long sword (esp. the tachi, worn on the hip edge down by samurai)

日本刀 (にっぽんとう) — Japanese sword (usu. single-edged and curved)

刀鍛冶 (かたなかじ) — swordsmith

太刀打ち (たちうち) — crossing swords

執刀 (しっとう) — performing a surgical operation

宝刀 (ほうとう) — treasured sword

長刀 (ちょうとう) — long sword

二刀流 (にとうりゅう) — liking both alcohol and sweets

木刀 (きがたな) — wooden sword

竹刀 (しない) — bamboo sword (for kendo)

Generated with kanjioftheday by Douglas Perkins.

Kanji of the Day: 凶 [Kanji of the Day]

凶

✍4

中学

villain, evil, bad luck, disaster

キョウ

凶悪 (きょうあく) — atrocious

凶器 (きょうき) — dangerous weapon

凶暴 (きょうぼう) — ferocious

元凶 (げんきょう) — ringleader

凶悪犯罪 (きょうあくはんざい) — atrocious crime

凶行 (きょうこう) — violence

吉凶 (きっきょう) — good or bad luck

豊凶 (ほうきょう) — rich or poor harvest

凶弾 (きょうだん) — assassin's bullet

凶作 (きょうさく) — bad harvest

04:00 AM

Daily Deal: The Ultimate Microsoft Office Professional 2021 for Windows License + Windows 11 Pro Bundle [Techdirt]

Microsoft Office 2021 Professional is the perfect choice for any professional who needs to handle data and documents. It comes with many new features that will make you more productive in every stage of development, whether it’s processing paperwork or creating presentations from scratch – whatever your needs are. Office Pro comes with MS Word, Excel, PowerPoint, Outlook, Teams, OneNote, Publisher, and Access. Microsoft Windows 11 Pro is exactly that. This operating system is designed with the modern professional in mind. Whether you are a developer who needs a secure platform, an artist seeking a seamless experience, or an entrepreneur needing to stay connected effortlessly, Windows 11 Pro is your solution. The Ultimate Microsoft Office Professional 2021 for Windows + Windows 11 Pro Bundle is on sale for $49.97 for a limited time.

Note: The Techdirt Deals Store is powered and curated by StackCommerce. A portion of all sales from Techdirt Deals helps support Techdirt. The products featured do not reflect endorsements by our editorial team.

Oregon Federal Judge Says ICE’s Warrantless Arrests Are Illegal [Techdirt]

ICE has been telling itself all it needs to do is write its own paperwork and it can do whatever it wants. Memos — passed around secretively and publicly acknowledged by no one but whistleblowers — told ICE agents they don’t need judicial warrants to arrest people or enter people’s homes.

All they need — according to acting director Todd Lyons, who issued the memos — is paperwork they could create and authorize without any need to seek the approval of anyone else. ICE calls them warrants but they’re just self-issued paperwork in which the officer says a person needs to be arrested and then signs it. That’s it. The review process begins and ends at the same desk. If the agent swears it to be true, he’s only swearing it to himself, which means every finger can be crossed and every “fact” can be fiction.

Courts aren’t having it. ICE’s internal memos may claim there’s no need for the Constitution to come between them and their mass deportation efforts, but that doesn’t mean the Constitution agrees to be sidelined. The courts are stepping in with increasing frequency to protect constitutional rights. A lot of activity in recent months has focused on the due process rights being denied to detainees.

More recent activity is focusing on the Fourth Amendment which, if violated, naturally lends itself to other rights violations. Via Kyle Cheney of Politico (who has been tracking these cases since Trump’s most recent election) comes another case where a federal judge refuses to play along with ICE’s unconstitutional game of charades.

The opening paragraph of this opinion [PDF] lays out the facts. And they are ugly.

ICE officers are casting dragnets over Oregon towns they believe to be home to agricultural workers, calling them “target rich.” Landing in those communities, officers surveil apartment complexes in the early morning hours, scan license plates for details about the vehicles’ owners, and wait for them to get into their vehicles. Officers then stop, arrest, detain and transport people out of the District of Oregon to the Northwest ICE Processing Center (“NWIPC”), 144 miles away in Tacoma, Washington, before ultimately deporting them. Sworn testimony and substantial evidence before this Court show that ICE officers ask few questions and allow little time before shattering windows, handcuffing people, and detaining them at an ICE facility in another state.

There’s no “worst of the worst” going on here. These are the actions of masked opportunists who know the only way to make the boss happy is to value quantity over quality. Untargeted dragnets cannot possibly rely on probable cause, even considering Justice Kavanaugh’s blessing of racial profiling. Given this — and the administration’s desire to see 3,000 arrests per day — immigration officers can’t even be bothered to issue administrative warrants, much less secure judicial warrants, before performing arrests.

The Oregon court drives home the point in the next paragraph (emphasis in the original):

The law on this issue is clear and undisputed. An ICE officer may arrest someone if the officer obtains in advance a warrant for their arrest. If the officer does not have a warrant, they cannot arrest someone unless they have probable cause to believe that both (1) the individual is in the United States unlawfully and (2) they are “likely to escape before a warrant can be obtained.”

The government’s response to this could be generously called “implausible.” It’s more accurately “risible” and backed by absolutely nothing that can’t be immediately contradicted by literally everything everywhere, as the court points out.

Plaintiffs challenge ICE’s practice of abusing its arrest power by failing to meet those criteria before arresting, detaining, and deporting people. Defendants do not—and could not—argue that this practice is lawful. Rather, they argue that there is no such practice, and that the myriad cases presented to this Court are mere coincidence.

But there is “such practice.” It’s impossible to deny it, even though the government tried to. The court isn’t interested in the government’s deflections and straight-up lies. It’s here to compare the facts to the law. Here are the facts:

[T]he overwhelming evidence in this record confirms that ICE officers targeted Woodburn and other cities in Oregon because of the large number of agricultural workers living in those areas. Officer testimony regarding human smuggling serves only as an inappropriate pretextual reason for developing reasonable suspicion for a stop. That officer also testified that he believed the van was suspicious because it had tinted windows and did not have any commercial markings.

When asked what gave the officers “reasonable suspicion that there may have been a crime afoot or that the folks in the van may not have had legal status,” the officer noted that the registered owner of the van had an immigration history, and that “[p]eople are being — going into a van early in the morning.” The officers did not have the identities of anyone in the van and they were not pursuing any known targets. The officers did not have a warrant for M-J-M-A-’s arrest.

Here’s more:

The evidence also demonstrates ICE’s practice of fabricating warrants after arrests were made. Tr. 306 (if an officer “encountered a file that did not have a warrant for arrest, an I-200,” he would create one); Tr. 356 (officer affirming that “for any case” involving a warrantless arrest, he would “create a warrant for the arrest after” individuals were detained at ICE field offices). This practice of creating warrants after the fact is highly probative of ICE’s failure to make individualized determinations of one’s escape risk prior to arresting them. That is especially true where, as in M-J-M-A-’s case, the encounter narratives for arrestees were exactly “the same.” Tr. 401.

Heading toward granting the requested restraining order, the court makes it explicitly clear that federal immigration officers are routinely violating constitutional rights:

The Court finds that ample evidence in this case demonstrates a high likelihood—if not a certainty—that Defendants are engaging in a pattern and practice of unlawful conduct in Oregon…

And if it’s unlawful in Oregon, it’s illegal everywhere in the United States. Nothing in this order relies on Oregon’s state Constitution. Everything here falls under the minimum standard set by the US Constitution and its amendments.

The order ends with a stark warning — one that makes it clear what’s happening now is not only extremely abnormal, but a threat to the Republic itself.

It is clear that there are countless more people who have been rounded up, and who either remain in detention or have “voluntarily” deported than those, like M-J-M-A-, who were fortunate enough to find counsel at the eleventh hour. Defendants benefit from this blitz approach to immigration enforcement that takes advantage of navigating outside of the boundaries of conducting lawful arrests. For the one detainee who has the audacity to challenge the legality of her detention and gains release, several more remain detained or succumb to the threat of lengthy detention, and then instead “voluntarily” deport. Defendants win the numbers game at the cost of debasing the rule of law.

Finally, this Court has previously described ICE officers’ field enforcement conduct as brutal and violent. The practices are intended to strike fear across large numbers of people throughout Oregon. The persistent intensity of regular ICE immigration enforcement operations may very well have the intended effect of normalizing this level of violence. If this normalization continues, then even greater harm will be inflicted.

This is all much larger than the individuals who have somehow managed to challenge this administration’s deportation activities. This is only where it begins. If the courts can’t get this shut down, this rot will be deliberately spread to cover anyone who isn’t sufficiently deferential to the authoritarians ensconced in the GOP.

12:00 AM

That’s what studies are for [Seth Godin's Blog on marketing, tribes and respect]

“Are you sure it’s going to work?”

That’s the wrong question to consider when proposing a study.

It’s also not helpful to say, “It’s unlikely to solve the problem.”

All the likely approaches have already been tried.

The useful steps are:

- Is there a problem worth solving?

- Is the expense of this test reasonable?

- Will the study cause significant damage?

- Of all the things we can test, is this a sensible one to try next?

Our fear of failure is real. It’s often so significant that we’d rather live with a problem than face the possibility that our new approach might be wrong.

If the problem is worth solving, it’s probably worth the effort and risk that the next unproven test will require.

[In this podcast, Dr. Jonathan Sackner-Bernstein talks with some patients and a doctor about his novel approach to Parkinson’s disease. Participants in the conversation bring up the conventional wisdom he’s challenging and share reasons why his theory probably won’t work. But none of the critics has a better alternative. The cost of the test is relatively low, and the stakes of the problem are quite high. There’s no clear answer. This is precisely what a study is for.]

What will it cost to test your solution to our problem? Okay, begin.

Friday 2026-03-06

11:00 PM

The Double Whammy Of The CBS, Warner Brothers Mergers Will Be A Layoff Nightmare [Techdirt]

You might recall that Paramount and CBS had only just started to lay off workers in the wake of the merger with David Ellison’s Skydance. Now, after Ellison (or more accurately his dad and the Saudis) dramatically overpaid for Warner Brothers ($111 billion plus numerous incentives), the overall debt load at the company is so massive, it could make past Warner Brothers chaos seem somewhat charming:

“The deal is tied up with so much debt that it virtually guarantees layoffs the likes of which Hollywood hasn’t seen before. That’s going to mean far less output from the suite of properties under Paramount and Warner’s control. And it will mean that the production apocalypse which has been brewing since the pandemic, the end of Peak TV, and the contraction of runaway green lights for streaming networks will grow still more apocalyptic.”

The real-world costs of this kind of pointless consolidation are always borne by consumers and labor. Executives get disproportionate compensation, tax breaks, and a brief stock bump. Workers get shitcanned and consumers get higher prices and shittier overall product in a bid to pay down debt. We have seen this happen over and over and over again in U.S. media. It’s not subtle or up for debate.

Keep in mind Warner Brothers has seen nothing but this kind of operational chaos over the last two decades as it bounced between pointless mergers with AOL, AT&T, and Discovery, all of which promised vast synergies and new innovation, but instead resulted in oceans of layoffs, higher prices, and consistently shittier product.

Now comes the granddaddy deal of them all to try and cement Larry Ellison’s obvious desire to dominate what’s left of U.S. media. The result will be run by his son David, whose operational judgement (if Bari Weiss’ start at CBS is any indication) is arguably worse than that of all the terrible, fail-upward, trust-fund brunchlord types that preceded him.

All of the debt from past deals just keeps piling up and being kicked down the road in a lazy, pseudo-innovative shell game (and this doesn’t include CBS!):

“In its initial $30-a-share bid for Warner Bros., Paramount was financing the purchase with up to $84 billion in pro forma debt. That has now risen to $31 a share, tacking on roughly another $2.5 billion, plus a “ticking fee” of 25 cents per share per quarter for every quarter the deal doesn’t close after September 30 of this year. Paramount is also paying Netflix’s breakup fee of $2.8 billion. Paramount has not released the financing details for the new deal, but it’s likely to be an even higher debt load.”

Ellison is also pretty broadly leveraged in the AI investment hype cycle, and if that bubble pops (or pops worse, as the case may be), this entire gambit could go wrong very, very quickly. Even the ongoing Saudi cash infusions may not be enough to save the company. Larry Ellison’s nepobaby son will of course be fine; the employees, consumers, and broader U.S. media market, not so much.

06:00 PM

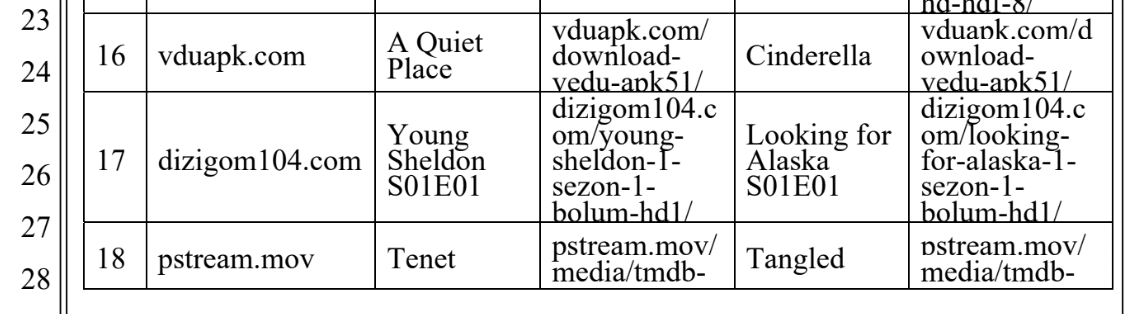

Pirate Streaming Portal ‘P-Stream’ Shuts Down Following ACE/MPA Pressure [TorrentFreak]

Last month, we reported on a new push from the Motion Picture Association and the ACE anti-piracy alliance, hoping to identify several pirate site operators.

They obtained DMCA subpoenas at a California federal court, requiring Discord and Cloudflare to share all personal information they have on customers associated with domains such as hdfull.org, sflix.fi, and pstream.mov.

ACE has used these subpoenas as an intelligence-gathering tool for years. While these efforts are often fruitless, as many site owners use fake data, they occasionally have some effect. That’s also true for the latest round, which has motivated P-Stream to shut down permanently.

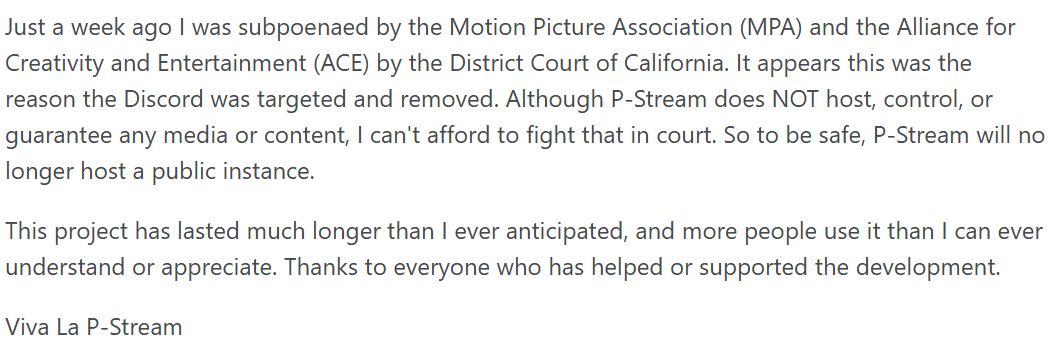

P-Stream Shuts Down

A few hours ago, P-Stream’s operator, Pas, informed TorrentFreak that they decided to shut down the website effective immediately. This decision is a direct result of the DMCA subpoena and the added legal pressure, which previously resulted in the loss of the Discord server as well.

People who try to access the site’s official domain are now redirected to a shutdown message. Pas stresses that P-Stream never hosted any infringing material, but the operator can’t afford to mount a legal defense if it comes to that.

“Although P-Stream does NOT host, control, or guarantee any media or content, I can’t afford to fight that in court. So to be safe, P-Stream will no longer host a public instance,” the operator writes.

While the operator regrets the shutdown, Pas also mentions that the project was life-consuming and took its toll, so the decision to throw in the towel could be a healthy one on that front too.

Code Remains Public

P-Stream was launched in April 2024, when movie-web was shut down by legal pressure from Hollywood. It eventually grew into a popular project of its own with close to an estimated ten million visits last month.

However, two years after its predecessor’s demise, history is repeating, perhaps in more ways than we now know.

The P-Stream project was largely based on sudo-flix, which itself was a successor to the original movie-web code. Today, the (alleged) P-Stream code remains available as well, through publicly available GitHub repositories. Whether these repos are controlled by the site’s operator is unknown.

As always, there will likely be people who try to keep the project going, and once they become popular enough, these projects will come on Hollywood’s radar, repeating the same process.

From: TF, for the latest news on copyright battles, piracy and more.

03:00 PM

Trump Administration Using Gross Video Game Footage To Cheerlead Its War Efforts [Techdirt]

We should all know by now that this iteration of the Trump administration absolutely loves using pop culture imagery, including that of video games, to help message its horrible policies. Want to gloat about ICE terrorizing American cities and generally pissing everyone off when they’re not too busy perforating innocents? Let’s use images from Pokémon and Halo! Want to celebrate the destruction of American health thanks to RFK Jr. being in charge of it? Time to whip together a Stardew Valley meme!

It’s gross, of course. Wrapping these pop culture images around fascism, particularly where real deaths have been a result, is nauseating.

But if you want to make this absolutely as disgusting as possible, you need only to use video game footage to gloat about the body count America is racking up in its war/non-war with Iran.

On March 4, the official White House Twitter account posted a roughly one-minute-long video featuring numerous clips of real military strikes against different Iranian locations and targets. At the very start of the video is a clip from 2023’s Modern Warfare III that shows a player activating an MGB killstreak. This is a hidden killstreak for players who get 30 kills without dying. Once called, the bomb ends the match. The official video was posted with the caption: “Courtesy of the Red, White & Blue.”

This is disgusting. Using video game footage to gloat about the Iranian body count is simply sick. Set aside what you think about this war. Set aside whether you think this administration has any fucking clue what it is doing and what will come next once it’s done dropping its bombs. Set aside the open question of what our goals actually are here, whether we’re going to see American troops on the ground in Iran, or whether this will end up as another American quagmire in the Middle East. None of that is the point here.

This isn’t a fucking game. It’s war, no matter how hard the president and the Republicans in Congress want to pretend otherwise so that they don’t have to do their damned jobs. War is a very serious matter, a sentence that never should need to be written in the first place. Eschewing that level of seriousness by treating this like it’s some kind of a video game and we’re all just trying to earn trophies and badges for our kill counts is fucking sick.

Particularly when you consider that this gloating includes over 1,200 Iranian deaths, including 175 schoolgirls who committed the crime of trying to learn.

IRAN: At least 1,230 people killed, including 175 schoolgirls and staff killed in a missile strike on a primary school in Minab in the country’s south on the war’s first day, according to the non-profit humanitarian group Iranian Red Crescent Society. It was unclear if the overall death toll included Islamic Revolutionary Guard Corps military casualties.

Here’s an image of the mass graves Iran says it dug in order to put all of those children to their final rest.

I wonder, are those girls included in the body count to get the White House its Xbox achievement?

War is not a game. Treating it like a video game shows that these are deeply unserious people that are not only running our government, but currently prosecuting a war that they don’t want to call a war. The naked cruelty of it all, rather than treating the enemy and, more importantly, the American people with respect, is horrifying.

That they’re doing it in our name, all the more so.

12:00 PM

Pluralistic: Blowtorching the frog (05 Mar 2026) executive-dysfunction [Pluralistic: Daily links from Cory Doctorow]


Today's links

- Blowtorching the frog: If I must have enemies, let them be impatient ones.

- Hey look at this: Delights to delectate.

- Object permanence: Bill Cosby v Waxy; Rodney King, 20 years on; Peter Watts v flesh-eating bacteria; American authoritarianism; Algebra II v Statistics for Citizenship; Ideas lying around; Banksy x Russian graffists; TSA v hand luggage; Hack your Sodastream; There were always enshittifiers.

- Upcoming appearances: Where to find me.

- Recent appearances: Where I've been.

- Latest books: You keep readin' em, I'll keep writin' 'em.

- Upcoming books: Like I said, I'll keep writin' 'em.

- Colophon: All the rest.

Blowtorching the frog (permalink)

Back in 2018, the Singletrack blog published a widely read article explaining the lethal trigonometry of a UK intersection where drivers kept hitting cyclists:

There are lots of intersections that are dangerous for cyclists, of course, but what made Ipsley Cross so lethal was a kind of eldritch geometry that let the cyclist and the driver see each other a long time before the collision, while also providing the illusion that they were not going to collide, until an instant before the crash.

This intersection is an illustration of a phenomenon called "constant bearing, decreasing range," which (the article notes) had long been understood by sailors as a reason that ships often collide. I'm not going to get into the trigonometry here (the Singletrack article does a great job of laying it out).

I am, however, going to use this as a metaphor: there is a kind of collision that is almost always fatal because its severity isn't apparent until it is too late to avert the crash. Anyone who's been filled with existential horror at the looming climate emergency can certainly relate.

The metaphor isn't exact. "Constant bearing, decreasing range" is the result of an optical illusion that makes it seem like things are fine right up until they aren't. Our failure to come to grips with the climate emergency is (partly‡) caused by a different cognitive flaw: the fact that we struggle to perceive the absolute magnitude of a series of slow, small changes.

‡The other part being the corrupting influence of corporate money in politics, obviously

This is the phenomenon that's invoked in the parable of "boiling a frog." Supposedly, if you put a frog in a pot of water at a comfortable temperature and then slowly warm the water to boiling, the frog will happily swim about even as it is cooked alive. In this metaphor, the frog can only perceive relative changes, so all that it senses is that the water has gotten a little warmer, and a small change in temperature isn't anything to worry about, right? The fact that the absolute change to the water is lethal does not register for our (hypothetical) frog.

Now, as it happens, frogs will totally leap clear of a pot of warming water when it reaches a certain temperature, irrespective of how slowly the temperature rises. But the metaphor persists, because while it does not describe the behavior of frogs in a gradually worsening situation, it absolutely describes how humans respond to small, adverse changes in our environment.

Take moral compromises: most of us set out to be good people, but reality demands small compromises to our ethics. So we make a small ethical compromise, and then before long, circumstances demand another compromise, and then another, and another, and another. Taken in toto, these compromises represent a severe fall from our personal standards, but so long as they are dripped out in slow and small increments, too often we rationalize our way into them: each one is only a small compromise, after all:

https://pluralistic.net/2020/02/19/pluralist-19-feb-2020/#thinkdifferent

Back to the climate emergency: for the first 25 years after NASA's James Hansen testified before Congress about "global heating," the changes to our world were mostly incremental: droughts got a little worse, as did floods. We had a few more hurricanes. Ski seasons got shorter. Heat waves got longer. Taken individually, each of these changes was small enough for our collective consciousness to absorb as within the bounds of normalcy, or, at worst, just a small worsening. Sure, there could be a collision on the horizon, but it wasn't anything urgent enough to justify the massive effort of decarbonizing our energy and transportation:

https://locusmag.com/feature/cory-doctorow-the-swerve/

It's not that we're deliberately committing civilizational suicide, it's just that slow-moving problems are hard to confront, especially in a world replete with fast-moving, urgent problems.

But crises precipitate change:

https://www.youtube.com/watch?v=FrEdbKwivCI

Before 2022, Europe was doing no better than the rest of the world when it came to confronting the climate emergency. Its energy mix was still dominated by fossil fuels, despite the increasing tempo of wildfires and floods and the rolling political crises touched off by waves of climate refugees. These were all dire and terrifying, but they were incremental, a drip-drip-drip of bad and worsening news.

Then Putin invaded Ukraine, and the EU turned its back on Russian gas and oil. Overnight, Europe was plunged into an urgent energy crisis, confronted with the very real possibility that millions of Europeans would shortly find themselves shivering in the dark – and not just for a few nights, but for the long-foreseeable future.

At that moment, the slow-moving crisis of the climate became the Putin emergency. The fossil fuel industry – one of the most powerful and corrupting influences in Brussels and around the world – was sidelined. Europe raced to solarize. In three short years, the continent went from decades behind on its climate goals to a decade ahead on them:

https://pluralistic.net/2025/10/11/cyber-rights-now/#better-late-than-never

Putin could have continued to stage minor incursions on Ukraine, none of them crossing any hard geopolitical red lines, and Europe would likely have continued to rationalize its way into continuing its reliance on Russia's hydrocarbon exports. But Putin lacked the patience to continue nibbling away at Ukraine. He tried to gobble it all down at once, and then everything changed.

There is a sense, then, in which Putin's impatient aggression was a feature, not a bug. But for Putin's lack of executive function, Ukraine might still be in danger of being devoured by Russia, but without Europe taking any meaningful steps to come to its aid – and Europe's solar transition would still be decades behind schedule.

Enshittification is one of those drip-drip-drip phenomena, too. Platform bosses have a keen appreciation of how much value we deliver to one another – community, support, mutual aid, care – and they know that so long as we love each other more than we hate the people who own the platforms, we'll likely stay glued to them. Mark Zuckerberg is a master of "twiddling" the knobs on the back-ends of his platforms, announcing big, enshittifying changes, and then backing off on them to a level that's shittier than it used to be, but not as shitty as he'd threatened:

https://pluralistic.net/2023/02/19/twiddler/

Zuck is a colossal asshole, a man who founded his empire in a Harvard dorm room to nonconsensually rate the fuckability of his fellow undergrads, a man who knowingly abetted a genocide, a man who cheats at Settlers of Catan:

https://pluralistic.net/2025/04/23/zuckerstreisand/#zdgaf

But despite all these disqualifying personality defects, Mark Zuckerberg has one virtue that puts him ahead of his social media competitor Elon Musk: Zuck has a rudimentary executive function, and so he is capable of backing down (sometimes, temporarily) from his shittiest ideas.

Contrast that with Musk's management of Twitter. Musk invaded Twitter the same year Putin invaded Ukraine, and embarked upon a string of absolutely unhinged and incontinent enshittificatory gambits that lacked any subtlety or discretion. Musk didn't boil the frog – he took one of his flamethrowers to it.

Millions of people were motivated to hop out of Musk's Twitter pot. But millions more – including me – found ourselves mired there. It wasn't that we liked Musk's Twitter, but we had more reasons to stay than we had to go. For me, the fact that I'd amassed half a million followers since some old pals messaged me to say they'd started a new service called "Twitter" meant that leaving would come at a high price to my activism and my publishing career.

But Musk kept giving me reasons to reassess my decision to stay. Very early into the Musk regime, I asked my sysadmin Ken Snider to investigate setting up a Bluesky server that I could move to. I was already very active on Mastodon, which is designed to be impossible to enshittify the way Musk had done to Twitter, because you can always move from one Fediverse server to another if the management turns shitty:

https://pluralistic.net/2022/12/23/semipermeable-membranes/

But for years, Bluesky's promise of federation remained just that – a promise. Technically, its architecture dangled the promise of multiple, independent Bluesky servers, but practically, there was no way to set this up:

https://pluralistic.net/2023/08/06/fool-me-twice-we-dont-get-fooled-again/

But – to Bluesky's credit – they eventually figured it out, and published the tools and instructions to set up your own Bluesky servers. Ken checked into it, and told me that it was all do-able, but not until a planned hardware upgrade to the Linux box he keeps in a colo cage in Toronto was complete. That upgrade happened a couple months ago, and yesterday, Ken let me know that he'd finished setting up a Bluesky server, just for me. So now I'm on Bluesky, at @doctorow.pluralistic.net:

https://bsky.app/profile/doctorow.pluralistic.net

I am on Bluesky, the service, but I am not a user of Bluesky, the company. That means that I'm able to interact with Bluesky users without clicking through Bluesky's abominable terms of service, through which you permanently surrender your right to sue the company (even if you later quit Bluesky and join another server!):

Remember: I knew and trusted the Twitter founders and I still got screwed. It's not enough for the people who run a service to be good people – they also have to take steps to insulate themselves (and their successors) from the kind of drip-drip-drip rationalizations that turn a series of small ethical waivers into a cumulative avalanche of pure wickedness:

https://pluralistic.net/2024/12/14/fire-exits/#graceful-failure-modes

Bluesky's "binding arbitration waiver" does the exact opposite: rather than insulating Bluesky's management from their own future selves' impulse to do wrong, a binding arbitration waiver permanently insulates Bluesky from consequences if (when) they yield to the temptation to harm their users.

But Bluesky's technical architecture offers a way to eat my cake and have it, too. By setting up a Bluesky (the service) account on a non-Bluesky (the company) server, I can join a social space that has lots of people I like, and lots of interesting technical innovations, like composable moderation, without submitting to the company's unacceptable terms of service:

https://bsky.social/about/blog/4-13-2023-moderation

If Twitter were still on the same slow enshittification drip-drip-drip of the pre-Musk years, I might have set up on Bluesky and stayed on Twitter. But thanks to Musk and his frog blowtorch, I'm able to make a break. For years now, I have posted this notice to Twitter nearly every day:

Twitter gets worse every single day. Someday it will degrade beyond the point of usability. The Fediverse is our best hope for an enshittification-resistant alternative. I'm @pluralistic@mamot.fr.

Today, I am posting a modified version, which adds:

If you'd like to follow me on Bluesky, I'm @doctorow.pluralistic.net. This is the last thread I will post to Twitter.

Crises precipitate change. All things being equal, the world would be a better place without Vladimir Putin or Elon Musk or Donald Trump in it. But these incontinent, impatient, terrible men do have a use: they transform slow-moving crises that are too gradual to galvanize action into emergencies that can't be ignored. Putin pushed the EU to break with fossil fuels. Musk pushed millions into federated social media. Trump is ushering in a post-American internet:

https://pluralistic.net/2026/01/01/39c3/#the-new-coalition

If you're reading this on Twitter, this is the long-promised notice that I'm done here. See you on the Fediverse, see you on Bluesky – see you in a world of enshittification-resistant social media.

It's been fun, until it wasn't.

Hey look at this (permalink)

- mctuscan heaven https://www.tumblr.com/mcmansionhell/809937203073581056/mctuscan-heaven

- What's a Panama? https://catvalente.substack.com/p/whats-a-panama

- The AI Bubble Is An Information War https://www.wheresyoured.at/the-ai-bubble-is-an-information-war/

- The Ticketmaster Monopoly Trial Starts https://www.bigtechontrial.com/p/the-ticketmaster-monopoly-trial-starts

- HyperCard Changed Everything https://www.youtube.com/watch?v=hxHkNToXga8

Object permanence (permalink)

#20yrsago Waxy threatened with a lawsuit by Bill Cosby over “House of Cosbys” vids https://waxy.org/2006/03/litigation_cosb/

#15yrsago Proposed TX law would criminalize TSA screening procedures https://blog.tenthamendmentcenter.com/2011/03/texas-legislation-proposes-felony-charges-for-tsa-agents/

#15yrsago Rodney King: 20 years of citizen photojournalism https://mediactive.com/2011/03/02/rodney-king-and-the-rise-of-the-citizen-photojournalist/

#15yrsago Mobile “bandwidth hogs” are just ahead of the curve https://tech.slashdot.org/story/11/03/02/2027209/High-Bandwidth-Users-Are-Just-Early-Adopters

#15yrsago Peter Watts blogs from near-death experience with flesh-eating bacteria https://www.rifters.com/crawl/?category_name=flesh-eating-fest-11

#15yrsago How a HarperCollins library book looks after 26 checkouts (pretty good!) https://www.youtube.com/watch?v=Je90XRRrruM

#15yrsago Banksy bails out Russian graffiti artists https://memex.craphound.com/2011/03/04/banksy-bails-out-russian-graffiti-artists/

#15yrsago TSA wants hand-luggage fee to pay for extra screening due to checked luggage fees https://web.archive.org/web/20110308142316/https://hosted.ap.org/dynamic/stories/U/US_TSA_BAGGAGE_FEES?SITE=AP&SECTION=HOME&TEMPLATE=DEFAULT&CTIME=2011-03-03-16-50-03

#15yrsago US house prices fall to 1890s levels (where they usually are) https://www.csmonitor.com/Business/Paper-Economy/2011/0303/Home-prices-falling-to-level-of-1890s

#10yrsago Whuffie would be a terrible currency https://locusmag.com/feature/cory-doctorow-wealth-inequality-is-even-worse-in-reputation-economies/

#10yrsago Ditch your overpriced Sodastream canisters in favor of refillable CO2 tanks https://www.wired.com/2016/03/sodamod/

#10yrsago Why the First Amendment means that the FBI can’t force Apple to write and sign code https://www.eff.org/files/2016/03/03/16cm10sp_eff_apple_v_fbi_amicus_court_stamped.pdf

#10yrsago Apple vs FBI: The privacy disaster is inevitable, but we can prevent the catastrophe https://www.theguardian.com/technology/2016/mar/04/privacy-apple-fbi-encryption-surveillance

#10yrsago The 2010 election was the most important one in American history https://www.youtube.com/watch?v=fw41BDhI_K8

#10yrsago As Apple fights the FBI tooth and nail, Amazon drops Kindle encryption https://web.archive.org/web/20160304055204/https://motherboard.vice.com/read/amazon-removes-device-encryption-fire-os-kindle-phones-and-tablets

#10yrsago Understanding American authoritarianism https://web.archive.org/web/20160301224922/https://www.vox.com/2016/3/1/11127424/trump-authoritarianism

#10yrsago Proposal: replace Algebra II and Calculus with “Statistics for Citizenship” https://web.archive.org/web/20190310081625/https://slate.com/human-interest/2016/03/algebra-ii-has-to-go.html

#10yrsago Panorama: the largest photo ever made of NYC https://360gigapixels.com/nyc-skyline-photo-panorama/

#1yrago Ideas Lying Around https://pluralistic.net/2025/03/03/friedmanite/#oil-crisis-two-point-oh

#1yrago There Were Always Enshittifiers https://pluralistic.net/2025/03/04/object-permanence/#picks-and-shovels

Upcoming appearances (permalink)

- San Francisco: Launch for Cindy Cohn's "Privacy's Defender" (City Lights), Mar 10
https://citylights.com/events/cindy-cohn-launch-party-for-privacys-defender/

- Barcelona: Enshittification with Simona Levi/Xnet (Llibreria Finestres), Mar 20
https://www.llibreriafinestres.com/evento/cory-doctorow/

- Berkeley: Bioneers keynote, Mar 27
https://conference.bioneers.org/

- Montreal: Bronfman Lecture (McGill), Apr 10
https://www.eventbrite.ca/e/artificial-intelligence-the-ultimate-disrupter-tickets-1982706623885

- London: Resisting Big Tech Empires (LSBU)
https://www.tickettailor.com/events/globaljusticenow/2042691

- Berlin: Re:publica, May 18-20
https://re-publica.com/de/news/rp26-sprecher-cory-doctorow

- Berlin: Enshittification at Otherland Books, May 19
https://www.otherland-berlin.de/de/event-details/cory-doctorow.html

- Hay-on-Wye: HowTheLightGetsIn, May 22-25
https://howthelightgetsin.org/festivals/hay/big-ideas-2

Recent appearances (permalink)

- Tanner Humanities Lecture (U Utah)
https://www.youtube.com/watch?v=i6Yf1nSyekI

- The Lost Cause
https://streets.mn/2026/03/02/book-club-the-lost-cause/

- Should Democrats Make A Nuremberg Caucus? (Make It Make Sense)
https://www.youtube.com/watch?v=MWxKrnNfrlo

- Making The Internet Suck Less (Thinking With Mitch Joel)
https://www.sixpixels.com/podcast/archives/making-the-internet-suck-less-with-cory-doctorow-twmj-1024/

- Panopticon :3 (Trashfuture)
https://www.patreon.com/posts/panopticon-3-150395435

Latest books (permalink)

- "Canny Valley": A limited edition collection of the collages I create for Pluralistic, self-published, September 2025 https://pluralistic.net/2025/09/04/illustrious/#chairman-bruce

- "Enshittification: Why Everything Suddenly Got Worse and What to Do About It," Farrar, Straus, Giroux, October 7 2025
https://us.macmillan.com/books/9780374619329/enshittification/

- "Picks and Shovels": a sequel to "Red Team Blues," about the heroic era of the PC, Tor Books (US), Head of Zeus (UK), February 2025 (https://us.macmillan.com/books/9781250865908/picksandshovels).

- "The Bezzle": a sequel to "Red Team Blues," about prison-tech and other grifts, Tor Books (US), Head of Zeus (UK), February 2024 (thebezzle.org).

- "The Lost Cause:" a solarpunk novel of hope in the climate emergency, Tor Books (US), Head of Zeus (UK), November 2023 (http://lost-cause.org).

- "The Internet Con": A nonfiction book about interoperability and Big Tech (Verso) September 2023 (http://seizethemeansofcomputation.org). Signed copies at Book Soup (https://www.booksoup.com/book/9781804291245).

- "Red Team Blues": "A grabby, compulsive thriller that will leave you knowing more about how the world works than you did before." Tor Books http://redteamblues.com.

- "Chokepoint Capitalism: How to Beat Big Tech, Tame Big Content, and Get Artists Paid, with Rebecca Giblin", on how to unrig the markets for creative labor, Beacon Press/Scribe 2022 https://chokepointcapitalism.com

Upcoming books (permalink)

- "The Reverse-Centaur's Guide to AI," a short book about being a better AI critic, Farrar, Straus and Giroux, June 2026

- "Enshittification, Why Everything Suddenly Got Worse and What to Do About It" (the graphic novel), Firstsecond, 2026

- "The Post-American Internet," a geopolitical sequel of sorts to Enshittification, Farrar, Straus and Giroux, 2027

- "Unauthorized Bread": a middle-grades graphic novel adapted from my novella about refugees, toasters and DRM, FirstSecond, 2027

- "The Memex Method," Farrar, Straus, Giroux, 2027

Colophon (permalink)

Today's top sources:

Currently writing: "The Post-American Internet," a sequel to "Enshittification," about the better world the rest of us get to have now that Trump has torched America (1066 words today, 43341 total)

- "The Reverse Centaur's Guide to AI," a short book for Farrar, Straus and Giroux about being an effective AI critic. LEGAL REVIEW AND COPYEDIT COMPLETE.

- "The Post-American Internet," a short book about internet policy in the age of Trumpism. PLANNING.

- A Little Brother short story about DIY insulin PLANNING

This work – excluding any serialized fiction – is licensed under a Creative Commons Attribution 4.0 license. That means you can use it any way you like, including commercially, provided that you attribute it to me, Cory Doctorow, and include a link to pluralistic.net.

https://creativecommons.org/licenses/by/4.0/

Quotations and images are not included in this license; they are included either under a limitation or exception to copyright, or on the basis of a separate license. Please exercise caution.

How to get Pluralistic:

Blog (no ads, tracking, or data-collection):

Newsletter (no ads, tracking, or data-collection):

https://pluralistic.net/plura-list

Mastodon (no ads, tracking, or data-collection):

Bluesky (no ads, possible tracking and data-collection):

https://bsky.app/profile/doctorow.pluralistic.net

Medium (no ads, paywalled):

https://doctorow.medium.com/

https://twitter.com/doctorow

Tumblr (mass-scale, unrestricted, third-party surveillance and advertising):

https://mostlysignssomeportents.tumblr.com/tagged/pluralistic

"When life gives you SARS, you make sarsaparilla" -Joey "Accordion Guy" DeVilla

READ CAREFULLY: By reading this, you agree, on behalf of your employer, to release me from all obligations and waivers arising from any and all NON-NEGOTIATED agreements, licenses, terms-of-service, shrinkwrap, clickwrap, browsewrap, confidentiality, non-disclosure, non-compete and acceptable use policies ("BOGUS AGREEMENTS") that I have entered into with your employer, its partners, licensors, agents and assigns, in perpetuity, without prejudice to my ongoing rights and privileges. You further represent that you have the authority to release me from any BOGUS AGREEMENTS on behalf of your employer.

ISSN: 3066-764X

All In on Texas [The Status Kuo]

I’m writing today in The Big Picture about Tuesday’s primary election in Texas—the “big prize” state that both parties have their eyes on.

On the Democratic side, it wasn’t a policy fight so much as a question of style. James Talarico ran on faith, unity and anti-corporate populism, while Jasmine Crockett came out swinging as the anti-Trump firebrand. Talarico won, but there’s a racial gap he’ll need to close. That said, he’s also made promising inroads with independents and Latino voters. The eye-popping numbers tell a fascinating story.

On the GOP side, Sen. John Cornyn and Texas AG Ken Paxton, a far-right extremist, both failed to reach a majority due to a spoiler candidate. That means a runoff at the end of May. Expect things to get ugly, and for Trump to play an outsize role—for better or worse for that party.

Texas is a big long-run prize because of its 40 Electoral College votes—possibly 45 after 2030. If Republicans maintain their hold there, Democrats will have to run the table elsewhere to win the White House. Talarico says the moment to take back Texas is now, and that he’s the standard bearer. Republicans, including Trump, are clearly worried. I lay this out, including some very interesting results, in today’s piece.

If you’re a subscriber, look in your inboxes this afternoon. If you’re not separately subscribed to The Big Picture, sign up below. While my own column there is always free and without any paywall, we appreciate our voluntary paid supporters immensely! The team is offering 20% off your first year, giving you full access to all the content:

See you this afternoon, and then back here tomorrow with my regular column.

Jay

10:00 AM

Ctrl-Alt-Speech: The (Content Moderation) Eras Tour [Techdirt]

Ctrl-Alt-Speech is a weekly podcast about the latest news in online speech, from Mike Masnick and Everything in Moderation‘s Ben Whitelaw.

Subscribe now on Apple Podcasts, Overcast, Spotify, Pocket Casts, YouTube, or your podcast app of choice — or go straight to the RSS feed.

In a special episode of Ctrl-Alt-Speech, Ben and Mike discuss (with apologies to Tay-Tay) the three eras of content moderation in the media and what comes next.

Their conversation builds on Ben’s essay in the soon-to-be-published Trust, Safety, and the Internet We Share: Multistakeholder Insights, a new book looking at the evolution of the Trust & Safety industry and how platform policy decisions are made. The pre-print is available online.

Together, they unpack three distinct phases: The Strange Fascination Era (2003–2015), when newsrooms powered platform growth and treated social media as an exciting new frontier; The “We’re Watching You” Era (2016–2020), when investigative reporting exposed online harms and pushed platforms to formalise Trust & Safety; and The Mask Off Era (2021–present), as platforms retreat from working with the media and the commitment to moderation wanes.

We’ll be back next week with our regular episode.

09:00 AM

OpenAI Rewrites Contract, Anthropic Returns to Negotiate—The Chaos Continues [Techdirt]

In less than a week, the Pentagon blacklisted an AI company for having ethics, declared it a supply chain risk, watched its preferred replacement face a massive user revolt, and then sat down to amend the replacement’s contract to address the very concerns the blacklisted company had been raising all along. Meanwhile, the blacklisted company is reportedly back in negotiations with the same Pentagon that tried to destroy it, because—wouldn’t you know—its models are apparently better for what the military actually needs.

On Monday night, Sam Altman posted on X that OpenAI had amended its Defense Department agreement to include new language explicitly addressing domestic surveillance:

We have been working with the DoW to make some additions in our agreement to make our principles very clear.

1. We are going to amend our deal to add this language, in addition to everything else:

“Consistent with applicable laws, including the Fourth Amendment to the United States Constitution, National Security Act of 1947, FISA Act of 1978, the AI system shall not be intentionally used for domestic surveillance of U.S. persons and nationals.

For the avoidance of doubt, the Department understands this limitation to prohibit deliberate tracking, surveillance, or monitoring of U.S. persons or nationals, including through the procurement or use of commercially acquired personal or identifiable information.”

Is this better than the original contract language we flagged earlier this week? Probably! The explicit mention of “commercially acquired personal or identifiable information” is new and addresses the exact data type—geolocation, browsing history, the stuff data brokers sell about all of us—that reportedly was the final sticking point in the Anthropic negotiations. The language about “deliberate tracking, surveillance, or monitoring” is more concrete than the original contract’s vague reference to “unconstrained monitoring.”

Altman also noted that the Defense Department “affirmed that our services will not be used by Department of War intelligence agencies (for example, the NSA)” and that any such use “would require a follow-on modification to our contract.”

This sounds better than where they were before, but it’s genuinely hard to tell from the outside. And that difficulty—the opaque nature of what any of this means in practice—is the actual story here.

Because the problem with OpenAI’s deal was never just about the specific contract language. As we laid out earlier this week, the intelligence community has spent decades engineering legal definitions that let it conduct what any reasonable person would call mass surveillance while truthfully claiming otherwise. Whether this new amendment survives contact with those definitions is a question no outside observer can answer right now.

The bigger issue is what happens to innovation when the rules can change based on a cabinet secretary’s mood. The contract still references compliance with existing legal authorities—the same authorities that have been stretched and reinterpreted for years to permit exactly the kinds of data collection the new language purports to prohibit.

Anthropic’s Dario Amodei was characteristically blunt about the gap between OpenAI’s public framing and what the contract language actually delivers. In a memo to staff that has since leaked:

“The main reason [OpenAI] accepted [the DoD’s deal] and we did not is that they cared about placating employees, and we actually cared about preventing abuses.”

Damn.

He called OpenAI’s messaging around the deal “straight up lies” and described the whole thing as “safety theater.” You can dismiss some of that as competitive sniping, but Amodei was in the room for the Anthropic negotiations, and his characterization of what the Pentagon was actually demanding lines up with what the New York Times separately reported. His criticism is specific and technical: the Pentagon asked Anthropic to delete a “specific phrase about ‘analysis of bulk acquired data’” that was “the single line in the contract that exactly matched this scenario we were most worried about.” OpenAI’s original contract conspicuously lacked any such language. The amendment addresses this, at least on its face. Whether it does so in a way that actually binds the Pentagon’s behavior is a different question.

But the contract language debate, as important as it is, obscures the much larger problem.

Look at what happened at OpenAI’s all-hands meeting on Tuesday. According to a partial transcript reviewed by CNBC, Altman told his employees this:

“So maybe you think the Iran strike was good and the Venezuela invasion was bad…. You don’t get to weigh in on that.”

That’s the CEO of one of the most important AI companies on the planet telling his workforce that operational decisions about how their technology gets used in military actions are entirely up to Defense Secretary Pete Hegseth. The same Pete Hegseth who, just days earlier, tried to nuke an entire company for asking that AI not make autonomous kill decisions. The same Hegseth whose idea of contract negotiation was to issue what we described earlier this week as a “corporate death penalty” against Anthropic.

Speaking of Anthropic, that situation has gone from tragedy to farce and back again. The Financial Times reports that Amodei is now in direct talks with Emil Michael, a Hegseth lackey, to try to salvage a deal. This is the same Emil Michael (a scandal-ridden former Uber exec) who, just last week, called Amodei a “liar” with a “God complex”. And the same Defense Department that designated Anthropic a supply chain risk. The same administration that directed every federal agency to “immediately cease” all use of Anthropic’s technology.

And yet here they are, back at the table. Because, as multiple reports have made clear, Anthropic’s Claude models were already deployed on the Pentagon’s classified network and were quite useful for the Defense Department. The Pentagon apparently needs Anthropic’s technology because it’s actually good at the job. This just highlights how monumentally stupid the whole “supply chain risk” gambit was. You don’t issue a corporate death penalty against a company whose product you’re actively relying on for military operations unless you’re operating on pure spite rather than strategy.

The public, meanwhile, is making its own calculations under this cloud of uncertainty. ChatGPT uninstalls spiked 295% the day after the OpenAI deal was announced, while downloads dropped significantly. Anthropic’s Claude app jumped to the top of the App Store. One-star reviews of ChatGPT surged nearly 775% over the weekend.

Users who have zero ability to evaluate the legal intricacies of EO 12333 or the practical significance of “commercially acquired personal or identifiable information” are making choices based on the clear understanding that something has gone seriously wrong.

Call it the uncertainty tax: when people can’t verify whether a company’s safety commitments are real, they treat visible conflict with authority as proof of authenticity. They default to the company that got punished for having safety commitments, because the punishment at least suggests there were some principles at play.

Getting punished for having principles is, perversely, the clearest indication that you had any, whether or not you actually did.

Altman himself seems to recognize that the rollout was a disaster. From his post:

One thing I think I did wrong: we shouldn’t have rushed to get this out on Friday. The issues are super complex, and demand clear communication. We were genuinely trying to de-escalate things and avoid a much worse outcome, but I think it just looked opportunistic and sloppy.

“Looked” opportunistic is doing a lot of work in that sentence. But okay.

The deeper issue here goes beyond any one contract or any one company. What we’ve watched unfold over the past week is a case study in why you cannot build a functional technology industry under a petulant, arbitrary authoritarian regime.

This is now what every AI company knows: if you tell the government “no” on something—even something as basic as “our AI shouldn’t make autonomous kill decisions without human oversight”—the Defense Secretary may try to destroy your company, publicly call you treasonous, and bar anyone doing business with the military from working with you. If you tell the government “yes,” you may face a massive consumer backlash, lose hundreds of thousands of users, and find yourself amending contracts on the fly to address concerns you should have thought about before signing.

Seems like a rough way to encourage innovation in the AI space.

And the rules can change at any moment. This week it’s “give us unrestricted access for all lawful purposes.” Next week, the definition of “lawful” might shift. The week after that, maybe the administration decides it doesn’t like something else about your company and the threats start anew. Altman told his employees that Hegseth made clear OpenAI doesn’t “get to make operational decisions.” So the company writes the safety stack, crosses its fingers, and hopes the people who just tried to destroy its largest competitor over basic ethical commitments will honor the contract language.

This is the environment the AI industry’s biggest Trump boosters created for themselves. For months, the refrain on certain VC bro podcasts was that the Biden administration was going to destroy AI and hand the industry to China. In reality, Biden’s AI policy amounted to a toothless set of principles and some extra paperwork. It was annoying, sure. It did not involve the Defense Secretary threatening to obliterate companies or the president directing all federal agencies to stop using a specific American company’s technology.

And the irony of it all is that the market seems to be figuring this out even as the companies’ leadership teams scramble to pretend everything is fine. The same users who were happily using ChatGPT a week ago are fleeing to Claude—the product of the company the government tried to destroy—because they’ve correctly identified that a company that got punished for standing up to an authoritarian government is probably more trustworthy than one that rushed to fill the void.

Innovation requires predictability. It requires the ability to plan, to hire, to build product roadmaps that extend beyond next Friday’s presidential tweet. It requires knowing that if you build something good and compete fairly, the government won’t try to destroy you because you annoyed a cabinet secretary during contract negotiations. Every AI company—even the ones currently benefiting from Anthropic’s punishment—should be deeply unsettled by what happened last week.

Because the leopard that ate Anthropic’s face last Friday can eat yours next Friday. All it takes is one disagreement, one insufficiently sycophantic response, one moment of “duplicity” defined as “having principles.”

Altman seems to partially grasp this. He publicly stated that the decision to designate Anthropic as a supply chain risk was “a very bad decision” and that the Pentagon should offer Anthropic the same terms OpenAI agreed to. That’s the right thing to say when facing a PR crisis like this. But saying it while simultaneously benefiting from the decision, while telling your employees they don’t get to have opinions about how their technology gets used in military operations, sends a somewhat mixed signal.

The lesson here has less to do with the specifics of any contract than with the fact that an impetuous, arbitrary, out-of-control authoritarian government is bad for innovation. I mean, it’s also bad for the public, society, and (arguably) the military. The US has led in innovation for decades in part because we had stable institutions and predictable rule of law.

But hey, at least nobody’s asking them to fill out compliance forms anymore. That was the real threat to American AI leadership.

07:00 AM

Kristi Noem Misled Congress About Corey Lewandowski’s Role In DHS Contracts [Techdirt]

This story was originally published by ProPublica. Republished under a CC BY-NC-ND 3.0 license.

Homeland Security Secretary Kristi Noem misled Congress on Tuesday about the powers of her controversial top aide Corey Lewandowski, according to records reviewed by ProPublica and four current and former DHS officials.

Lewandowski has an unusual role at DHS, where he is not a paid government employee but is nonetheless acting as a top official, helping Noem run the sprawling agency. For months, members of Congress have asked the agency to detail the scope of his work and authority.

At a Senate Judiciary Committee hearing on Tuesday, Sen. Richard Blumenthal, D-Conn., asked Noem whether Lewandowski has “a role in approving contracts” at DHS. Noem responded with a flat denial: “No.”

But internal DHS records reviewed by ProPublica contradict Noem’s Senate testimony. The records show Lewandowski personally approved a multimillion-dollar equipment contract at the agency last summer.

That was not a one-off. Lewandowski has approved numerous contracts at DHS and often needs to sign off on large ones before any money goes out the door, the current and former department employees said.

Last year, Noem imposed a new policy that consolidated her and her top aides’ power over all spending at DHS, requiring that she personally review and approve all contracts above $100,000. Before the contracts reach Noem, they must be approved by a series of political appointees, who each sign or initial a checklist sometimes referred to internally as a routing sheet. Typically, the last name on the checklist before Noem’s is Lewandowski’s, the DHS officials said.

Under federal law, it is a crime to “knowingly and willfully” make a false statement to Congress. But in practice, it is rarely prosecuted.

In a statement, a DHS spokesperson reiterated Noem’s claim. “Mr. Lewandowski does NOT play a role in approving contracts,” the spokesperson said. “Mr. Lewandowski does not receive a salary or any federal government benefits. He volunteers his time to serve the American people.” Lewandowski did not respond to a request for comment.

Several news outlets, including Politico, have previously reported on aspects of Lewandowski’s involvement in contracting at DHS.

There have been widespread reports of delays caused by the new contract approval process at the agency, which has responsibilities spanning from immigration enforcement to disaster relief to airport security. DHS has asserted that the review process saved taxpayers billions of dollars.

A similar sign-off process exists for other policy decisions at DHS. One of the checklists, about rolling back protections for Haitians in the U.S., emerged in litigation last year. It featured the signatures of several top DHS advisers. Under them was Lewandowski’s signature, and then Noem’s.

Lewandowski is what’s known as a “special government employee,” a designation historically used to let experts serve in government for limited periods without having to give up their outside jobs. (At the beginning of the Trump administration, Elon Musk was one, too.) Special government employees have to abide by only some of the same ethics rules as normal officials and are permitted to have sources of outside income.

Lewandowski has declined to disclose whether he is being paid by any outside companies and, if so, who.

05:00 AM

Judges To AG Pam Bondi: It’s OK For The Gov’t To Dox People, But Not OK For People To Dox Gov’t Employees? [Techdirt]

Yet another one to add to the “it’s OK if we do it” file for the Trump administration. This administration is cool with censoring speech, nationalizing elections, seizing the means of production, and blackmailing law firms and universities. It would be heated AF if any other administration did these things, but since it’s the one doing it, it’s all cool and (probably not) legal.

Not a day goes by that its hypocrisy isn’t exposed. Here’s the latest, which certainly isn’t the last: the DOJ’s insistence that government employees be given preferential treatment in court.

Multiple bullshit prosecutions are underway, with AG Pam Bondi’s DOJ hoping to convert regular protest stuff into long-lasting federal felony charges. This hasn’t gone well for the DOJ, which tends to find itself rejected by grand juries when not getting its vindictive prosecutions tossed because they’ve been brought by prosecutors who don’t have legal claim to the positions they’re holding.

While the government continues to make social media hay by tweeting out wild allegations and the personal information of people who have yet to have their day in court, it simultaneously claims it should be illegal to identify federal officers and post their information to social media.

And while that’s just the government being hypocritical in terms of social media blasts, it’s engaging in another level of hypocrisy that’s not as easily dismissed. As Josh Gerstein reports for Politico, Attorney General Pam Bondi’s personal participation in this form of hypocrisy is not only inexcusable, but it’s also on the wrong side of the law.

Two federal judges have raised concerns about Attorney General Pam Bondi’s use of social media to publicize a wave of arrests last month of people charged with interfering with federal officers during an immigration enforcement surge in Minnesota.

When the government seeks protective orders to shield the details of cases from the public eye, the order applies to the government as much as it does to the defendants. But since Bondi can’t keep herself from scoring internet points on behalf of the Trump administration, she’ll be lucky to keep these particular prosecutions going.

That’s the upshot of this court order [PDF], handed down by Minnesota federal judge Dulce Foster:

As a threshold matter, the government’s claimed concern about the victim/agents’ “dignity and privacy” and the risk of doxxing is eyebrow-raising, to say the least. On January 28, 2026, at 12:53 p.m., Attorney General Pam Bondi publicly posted a tweet on X announcing, to a national audience, that Ms. Flores was arrested along with 15 other people as “rioters” who “have been resisting and impeding our law enforcement officers.” […] In publicly posting that information, the government failed to respect Ms. Flores’s dignity and privacy, exposed her to a risk of doxxing, and generally thumbed its nose at the notion that defendants are innocent until proven guilty. The post also directly violated a court order sealing the case (ECF No. 6), which was not lifted until the Court conducted initial appearances later that day (see ECF No. 7).

If the argument is that it’s dangerous for federal officers to be publicly identified but perfectly fine for random citizens to be exposed to threats of violence, the argument is deeply flawed. At worst, it’s the most powerful people arguing that the least powerful people should be exposed to the same sort of stuff they claim federal officers might be exposed to if their names are made public.

At best, it’s a tacit admission that more people are opposed to this administration’s actions than are opposed to the actions of those who engage in protests. If the DOJ really believed that what the government is doing is good and supported by a majority of the public, it wouldn’t seek protective orders preventing the release of personal information.

But that’s not the case it made in court. And courts are now refusing to pretend the government is operating in good faith when it says some personal information is more equal than other personal information.

This determination was echoed in another court decision dealing with a Minneapolis-based prosecution:

At a hearing in a separate Minneapolis case last week, another magistrate judge, Shannon Elkins, directed prosecutors to “address whether the public posting of photographs violated the Court’s sealing order.” The government missed a deadline Tuesday to respond. Elkins later agreed to extend the deadline until Monday.